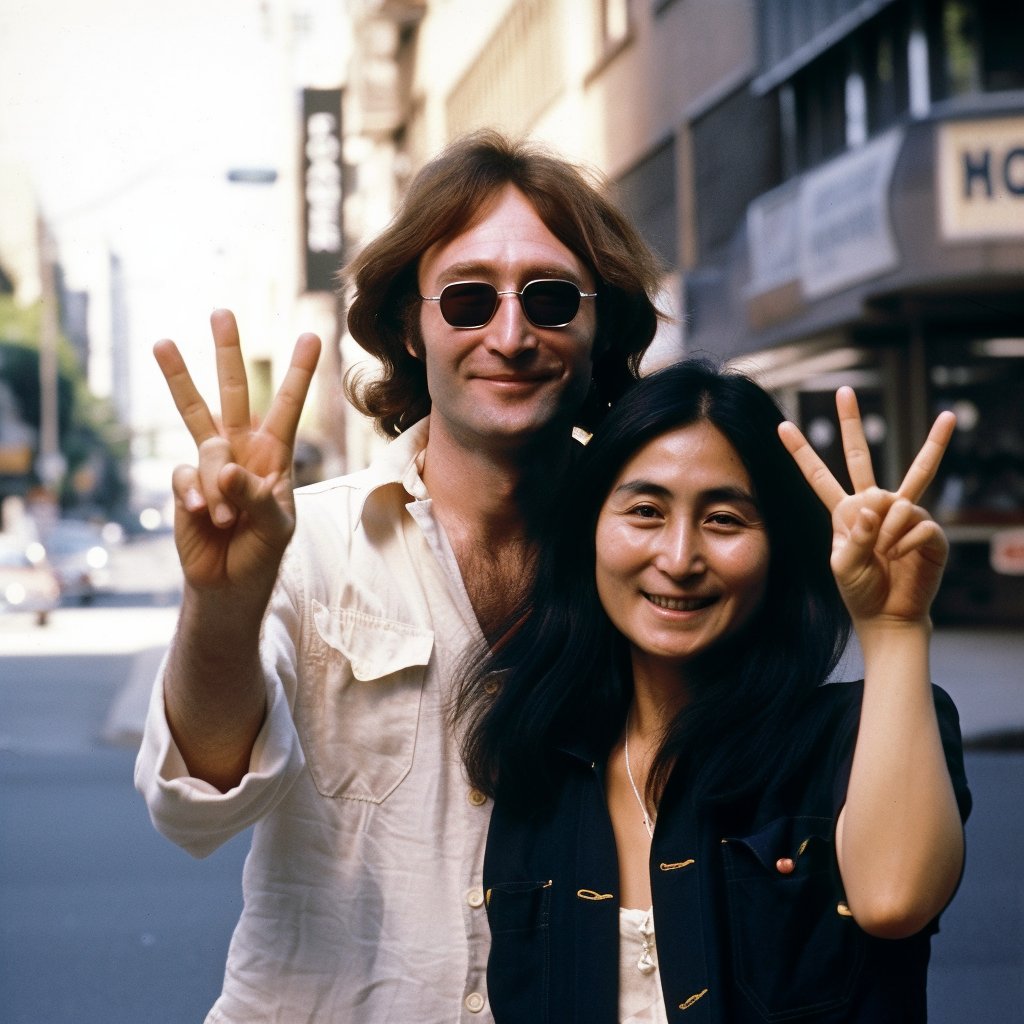

Pope Francis in an oversized jacket in the street, Vladimir Putin kneeling before the Chinese president... You have surely seen some of these improbable images on social networks! These strikingly lifelike visuals are in fact generated very easily by sites accessible to the general public. Midjourney, DALL-E 2 and Stable Diffusion are the best known. They use machine learning to create images from the vast amount of data available on the Internet.

This ability to create and reproduce reality often causes confusion among Internet users. While it is not always possible to clearly tell a generated image from a real photo, a few tips can still help you see things more clearly.

AI or not? Here are some tips to help you!

Follow the news 📰

Some surprising images only make sense once they are put back in context. Ask yourself: in what context does what I am seeing take place?

In November 2018, former Gabonese president Ali Bongo Ondimba dropped off the radar for several weeks. Rumors of his death circulated in the country.

Hospitalized on October 24 in Saudi Arabia following a stroke, Ali Bongo reappeared on television on December 31, part of his face paralyzed. Amid the speculation, many Gabonese then believed it was a deepfake generated by AI.

Check whether it is disclosed ✅

Thanks to watermarking, a discreet logo or watermark may appear directly on the image. A note in the image's caption or description may also state that it was generated by AI.

Spot the details that don't add up and sharpen your critical thinking 🧠

When it creates images, generative AI struggles with certain visual details. This sometimes produces inconsistencies that are visible to the naked eye!

Extra fingers, missing limbs, blurry faces and other anomalies... Within an image, part of a body can come out partially distorted or look bizarre. In the near future, AI may no longer make these errors.

Go back to the source of the image 🖼️

If in doubt, do not hesitate to run a reverse image search to find any trace of the image on the web. Generative AI tools mainly draw on public databases!

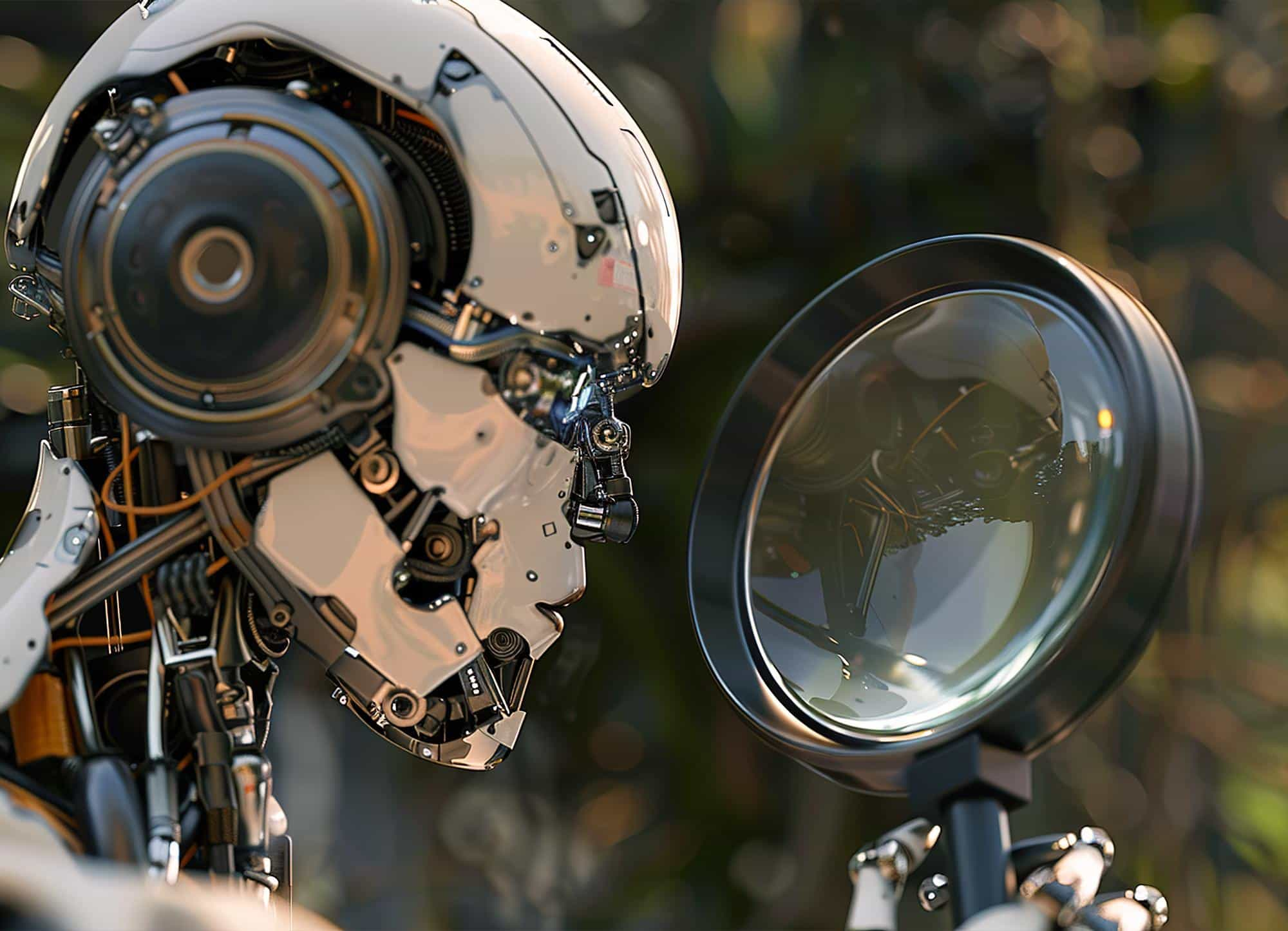

Use AI against AI

This technology is improving quickly. It is possible that, before long, the flaws in its images and videos will no longer be perceptible to the naked eye. Once retouched, they can already seem very credible and can therefore be used for disinformation purposes.

Fortunately, tools are already being developed to detect this content and offer the beginnings of a solution. One example is SynthID, launched in September 2023 by Google. Still in beta, the tool remains inaccessible to the general public for the time being.