Romance scams, also called “love scams”, have been around for a long time and regularly make headlines. There is no shortage of examples. Whether it is crooks on Tinder or inheritance scams, all these frauds have one thing in common: deception! Many scammers use photos of real people and pretend to be them in order to extort money from their victims.

Very recently, however, a case that made headlines around the world perfectly illustrated this type of scam: the romance fraud that Anne experienced firsthand. This 53-year-old Frenchwoman was swindled out of $850,000 by a fake Brad Pitt. The victim told her sad story on the French television channel TF1, explaining that she had believed she was in contact with the real international star. Anne explained why she transferred $850,000 to the fake Brad Pitt: she believed that he was suffering from kidney disease and that he could not access his accounts because of his ongoing divorce proceedings.

A family affair

In this unusual story, Anne was reportedly contacted on Instagram in February 2023 by someone claiming to be Jane Etta, Brad Pitt's mother. This fake mother allegedly told Anne that her son "needed a woman like her". The next day, the fake Brad Pitt entered the scene, contacting Anne with the words: "My mother told me a lot about you".

Over time, they built a “digital romance” and, without arousing the slightest suspicion, the fake Brad Pitt talked about his situation and began making subtle financial requests. First, he claimed he wanted to send her luxury gifts but, because of his divorce proceedings with actress Angelina Jolie, his accounts were frozen and he could not pay the customs duties. Despite some doubts, Anne ended up convinced: "Whenever I doubted him, he managed to dispel my doubts". This is how Anne transferred the tidy sum of 9,000 euros for imaginary gifts.

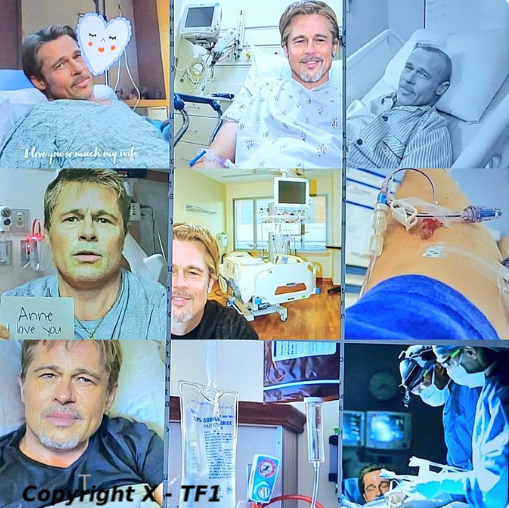

Then the fake Brad Pitt's financial requests changed in nature when he asked for money to pay for treatment for his kidney cancer. The crooks then sent the victim photos of Brad Pitt. These AI-generated photos showed the American actor lying in a hospital bed, accompanied by messages proclaiming his eternal love for her. She said: "I looked for these photos on the internet but I couldn't find them, so I thought it meant that he had taken these selfies just for me".

This hospitalization also strengthened their intimate bond. Having survived cancer herself, Anne felt she was saving a life: "I told myself that I might be saving a man's life".

Watch out for deepfakes!

It was then that Anne gathered all her savings, sending 830,000 euros to her supposed sweetheart, who was fighting kidney cancer. Anne's daughter, then 22 years old, did everything she could to convince her mother that it was not the real Brad Pitt, later telling TF1: "It's very hard to see how naive my mother was". It was no use: Anne was completely convinced by the AI-generated images.

However, the relationship took another turn when photos of Brad Pitt and his new girlfriend, Ines de Ramon, circulated on social media. The victim was deeply shaken, but the crooks managed to create a fake press article in which Brad Pitt spoke of his "exclusive relationship with such a wonderful person ... named Anne". This fake article allowed the crooks to regain the victim's trust.

After all these misdeeds, Anne realized that her digital relationship with the supposed Brad Pitt was in fact pure identity theft. She managed to put an end to this devastating relationship. Even so, the crooks, who stopped at nothing, tried to extract more money from her by posing as a certain John Smith, a special agent of the FBI. Anne decided to go to the police to file a complaint. The victim now lives with a friend. Her life has been shattered by the crooks: “My whole life comes down to a small room with a few boxes. That's all I have left.” In her distress, she attempted suicide three times.

This story went viral worldwide, because of both the size of the sum stolen and the technique used. The victim has drawn strong reactions, including a great deal of mockery.

Toulouse FC, a French football club, mocked her in a since-deleted tweet: "Hello Anne, Brad told us that he would be at the stadium on Wednesday for Toulouse-Laval. What about you?". Netflix France took advantage of this unfortunate incident to promote films starring Brad Pitt, writing "Four films to see for free with (the real) Brad Pitt".

Although many people mocked Anne, others, on the contrary, highlighted the value of her testimony. A popular post on X relayed this comment:

“I understand the comic effect. Nevertheless, we are talking about a woman in her fifties who was ripped off with deepfakes and AI. Think about how your parents and grandparents would have acted in such circumstances!”

A column published in the newspaper Libération describes Anne as a whistleblower: "Today, life is paved with cyber-scams ... and the progress of AI will make this scenario worse". Many other people have very likely been through the same situation as Anne; sometimes the shame and stigma attached to falling for this type of scam prevent older women from speaking out.

Anne also declared on a popular French program that she was not "crazy or a fool", while acknowledging: "I got taken in, I admit it ... That's why I wanted to testify, because I am not the only one".

Indeed, Anne is not the only one to have suffered this misfortune. Two Spaniards were also victims of a Brad Pitt "deepfake" scam: one paid 175,000 euros, and the other had 150,000 euros stolen. In a similar scenario, Nikki Macleod, a retired Scottish lecturer, was tricked with a fake photo and fake videos into handing over 17,000 pounds sterling. The seventy-seven-year-old from Edinburgh said: "I am not stupid, but this person managed to convince me that we were going to spend our lives together."

How do fraudsters exploit their victims?

Social media did not create romance fraud, but it is responsible for its scale. A few clicks are enough to reach people miles away or in other countries. These crooks are not content with stealing your money; they first set out to steal your heart, leaving you unable to resist their outsized financial demands.

According to a Data Spotlight from the US Federal Trade Commission (FTC), "nearly 70,000 people reported a romance scam and reported losses hit a record $1.3 billion in 2022". These crooks do not hit the jackpot by chance. They study their victims and create characters, mainly targeting older people who are not necessarily comfortable with technology. They exploit their loneliness and emotional vulnerability, and they take advantage of their emotions, their intelligence and, above all, their money. The victims' profiles vary, but they often have one thing in common: they are not comfortable with digital tools.

The crooks work to gain their victims' trust through constant communication. False promises and conversations in the style of a gentle interrogation are all tricks for skillfully dominating their victims. First contact is usually made on digital platforms (mainly dating apps or social media) using photos of an attractive person. Once contact is established, the crooks work to win the potential victim's trust, then move the conversation to a more private platform, typically WhatsApp or Telegram.

In addition, these online crooks always have reasons why they cannot meet their potential victim in person. They are playing for time in order to gather more personal information.

They also have ready-made scam scripts that they can adapt to different types of people, depending on the information they have been able to collect. For example, if a potential victim says they lost a family member, a friend or a spouse to cancer, sooner or later their online friend will also be struck by cancer and will need their help. Having already lost a loved one to cancer, these victims give in to their emotions and then to the requests. This spiral can go as far as maxing out their credit cards, selling their house and spending all their own funds to save their supposed friend. When the victim can no longer provide additional funds, the online predators turn to blackmail and threats, generally leaving their victims financially and emotionally broken, a trauma that can even lead to suicide. These crooks now also use artificial intelligence to generate images and videos that look real and are almost undetectable.

Loti, a startup specializing in digital protection, in particular against deepfakes, told Fox News Digital that scams involving deepfake images and videos are "increasingly common".

How can you spot an AI-generated deepfake video?

Like anything fake, deepfakes have inconsistencies and weaknesses that set them apart from the real thing. However, deepfake photos and videos improve every day, which makes it increasingly difficult to tell the real from the fake.

To date, spotting a deepfake photo or video comes down to scrutinizing fine, high-definition detail, especially the face and the eyes (do they blink or not?). Skin tone may look oversaturated, with blurry backgrounds. Facial expressions may be distorted, and the lips may not move in step with the voice. Hands and fingers may be deformed, and shadows or lighting may look wrong. The shape of the ears can change from one frame to the next, even though the AI tries to maintain some consistency. Accessories such as glasses or earrings may flicker or appear misshapen. Movements and body language can be jerky, robotic or stiff, and limbs may be out of proportion with the rest of the body.

On the audio side, background sounds are often conspicuous and the voice can sound somewhat robotic, with glitches, odd accents or unnatural intonation. The audio may be slightly out of sync with the video, producing unnatural lip movements. A marked mismatch between the voice and the movements of the body can also indicate a deepfake.

What tools can help detect deepfakes?

Carefully examining each frame of the video for the warning signs above can reveal anomalies.

Tools such as FotoForensics, which can be used to look for inconsistencies in a file's metadata, are also a useful aid.
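For technically minded readers, here is a minimal Python sketch of one small part of such a check: listing the metadata blocks stored at the start of a JPEG file (EXIF, XMP and comments). It is a toy illustration written for this article, not how FotoForensics works, and the function names are hypothetical. Keep in mind that missing metadata proves nothing on its own: many social networks strip it on upload, and an image can be re-saved to remove or forge it.

```python
import struct


def jpeg_header_segments(data: bytes) -> list[tuple[int, bytes]]:
    """Return (marker, payload) pairs for the JPEG segments before the image data."""
    if data[:2] != b"\xff\xd8":  # every JPEG starts with the SOI marker
        raise ValueError("not a JPEG file (missing SOI marker)")
    segments = []
    pos = 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:
            raise ValueError(f"expected a marker at offset {pos}")
        marker = data[pos + 1]
        if marker == 0xFF:  # padding byte before a marker: skip it
            pos += 1
            continue
        if marker in (0xD9, 0xDA):  # end of image or start of scan: header is over
            break
        # The 2-byte big-endian length counts itself but not the marker bytes.
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])
        segments.append((marker, data[pos + 4:pos + 2 + length]))
        pos += 2 + length
    return segments


def metadata_summary(data: bytes) -> dict:
    """Report which common metadata blocks are present in a JPEG file."""
    segments = jpeg_header_segments(data)
    return {
        # APP1 (0xE1) segments carry EXIF or XMP, told apart by their prefix.
        "has_exif": any(m == 0xE1 and p.startswith(b"Exif\x00\x00") for m, p in segments),
        "has_xmp": any(m == 0xE1 and p.startswith(b"http://ns.adobe.com/xap/1.0/") for m, p in segments),
        # COM (0xFE) segments hold free-text comments, sometimes left by editing tools.
        "comments": [p.decode("latin-1") for m, p in segments if m == 0xFE],
    }
```

To try it on a real picture, read the file in binary mode, for example `metadata_summary(open("photo.jpg", "rb").read())`, where `photo.jpg` stands for any local image. The result only says which metadata is present; judging whether it is consistent remains a human task.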

A reverse image search with Google Lens or TinEye can also help determine whether the original image that was copied appears elsewhere.
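Reverse image search engines do not publish exactly how they match pictures, but the basic idea of recognizing a near-duplicate image can be illustrated with a simple perceptual hash. The Python sketch below implements the classic "average hash" (aHash) on a grayscale image given as a list of pixel rows. It is a simplified stand-in chosen for illustration, not the method used by Google Lens or TinEye, and all names are hypothetical.

```python
def downscale(pixels: list[list[float]], size: int = 8) -> list[list[float]]:
    """Shrink a grayscale image (list of rows) to size x size by block averaging."""
    h, w = len(pixels), len(pixels[0])
    if h < size or w < size:
        raise ValueError("image must be at least size x size pixels")
    small = []
    for by in range(size):
        y0, y1 = by * h // size, (by + 1) * h // size
        row = []
        for bx in range(size):
            x0, x1 = bx * w // size, (bx + 1) * w // size
            block = [pixels[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            row.append(sum(block) / len(block))
        small.append(row)
    return small


def average_hash(pixels: list[list[float]], size: int = 8) -> int:
    """Return a size*size-bit fingerprint: bit is 1 where a cell is brighter than the mean."""
    cells = [v for row in downscale(pixels, size) for v in row]
    mean = sum(cells) / len(cells)
    bits = 0
    for v in cells:
        bits = (bits << 1) | (1 if v > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Count differing bits; a small distance suggests the same underlying picture."""
    return bin(a ^ b).count("1")
```

In this sketch, a brightened copy of a picture keeps the same fingerprint, so its distance to the original is 0, whereas a mirrored copy can end up with a completely different fingerprint, which shows the limits of such a simple approach. A real photo would first have to be converted into a grid of gray levels, for example with an imaging library.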

AI detection software such as Deepware Scanner, Microsoft Video Authenticator or Sensity AI can help flag deepfakes.

Charity Ani Kosisohukwu