Indeed, this new technology could cause significant damage in countries where digital literacy is still too low or too limited to tell true from false. What should we know about this new trend in technological disinformation?

The new art of sowing doubt

Deepfakes are based on the manipulation of video images. Videos, sounds or speech are generated using algorithms from the field of artificial intelligence.

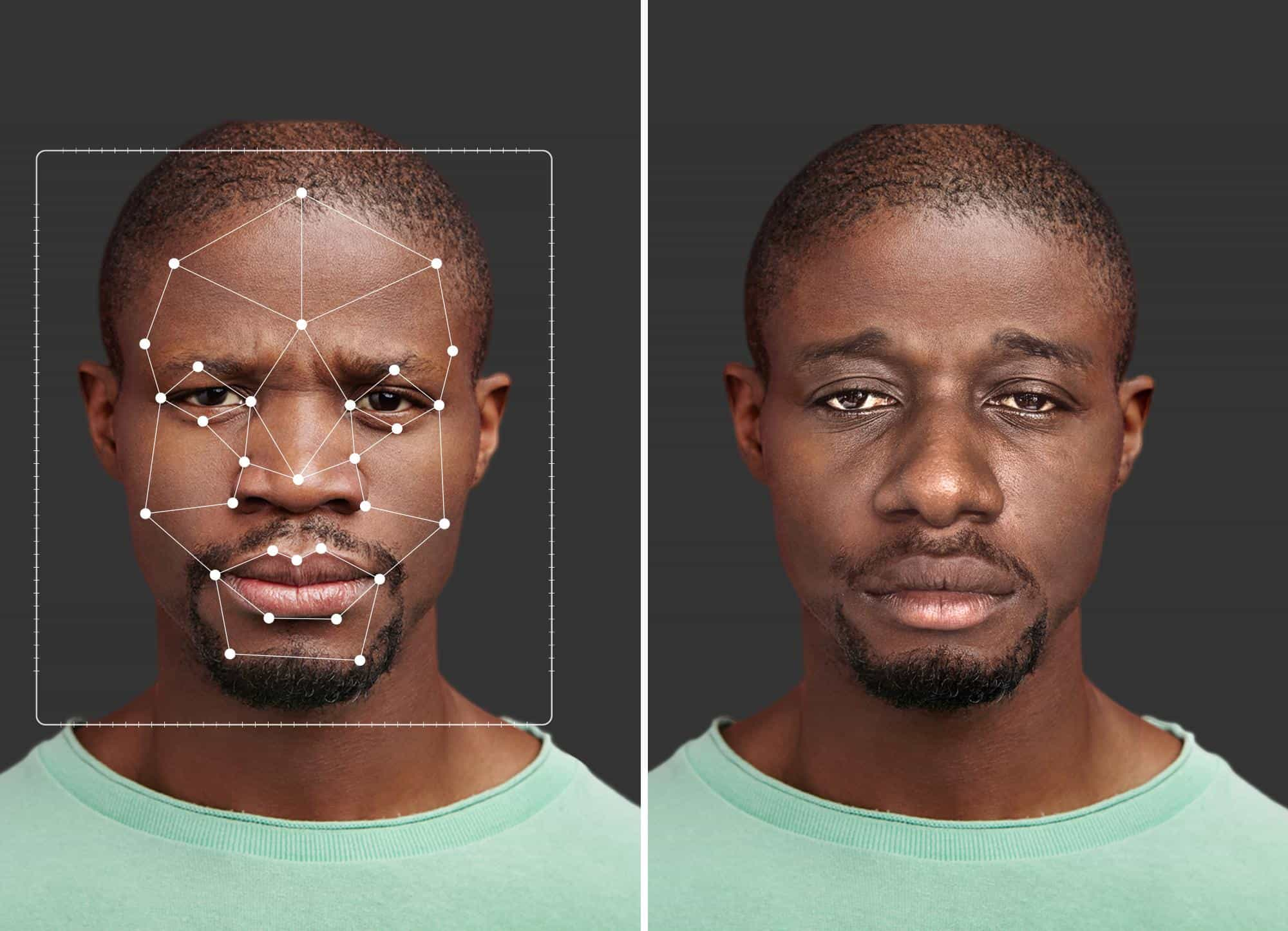

Deepfakes raise several problems. First, their danger is that they suggest anything may have been manipulated, and that such manipulation may become impossible to detect in the future. For example, deepfakes can digitally graft one person's likeness onto another. These alterations can be almost imperceptible in the face, voice or body because audio and visual quality are constantly improving. As detection becomes very difficult, this new disinformation technology turns into a weapon of mass illusion, able to deceive even the most experienced observers.

Another major problem is that some political candidates and their supporters, as well as certain companies or individuals, use this technology to attribute to public figures, organizations or governments words they never said. In the worst cases, this can trigger political, diplomatic, economic, social or even health incidents.

Finally, fraudsters can use deepfakes to impersonate people in order to extort money or information, which increases the risk of cybercrime.

With deepfakes, much audiovisual content can be called into question. But if we can no longer trust what we see, how can we believe what is presented in the media or on social networks?

What can we do about the deepfake phenomenon?

How can you defend yourself against videos that seem truer than life? How can we be sure that a video is not a manipulation used for political purposes, to destabilize the state or to reinforce a lie?

Despite these advances, convincing deepfakes remain difficult to produce. For example, the blink rate is one of the main clues for detecting a deepfake. Eye movement is also sometimes out of sync with the rest of the face. In addition, most deepfakes are designed to work from the front, so errors may appear when the person turns to profile: the ears or glasses become distorted. There may be inconsistencies or contrast problems between different parts of the body. Also watch the movements of the mouth and lips to check that they match the sounds being made.
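
To make the blink-rate clue concrete, here is a minimal Python sketch. It assumes that an eye-openness score per video frame has already been computed by some face-analysis tool (that step is not shown), and it simply counts how often the score dips below a threshold. The function names, the threshold of 0.2 and the two-frame minimum are illustrative assumptions, not values taken from a specific detector.

```python
from typing import Sequence


def count_blinks(scores: Sequence[float],
                 threshold: float = 0.2,
                 min_frames: int = 2) -> int:
    """Count blinks in a sequence of per-frame eye-openness scores.

    A blink is a run of at least `min_frames` consecutive frames whose
    score is below `threshold`. Both defaults are assumed, illustrative
    values and would need tuning for a real video.
    """
    blinks = 0
    run = 0  # length of the current run of "eye closed" frames
    for score in scores:
        if score < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    # Handle a blink that is still in progress when the clip ends.
    if run >= min_frames:
        blinks += 1
    return blinks


def blinks_per_minute(scores: Sequence[float],
                      fps: float,
                      threshold: float = 0.2,
                      min_frames: int = 2) -> float:
    """Convert the blink count into a rate, given the video frame rate."""
    if fps <= 0 or len(scores) == 0:
        return 0.0
    duration_minutes = len(scores) / fps / 60.0
    return count_blinks(scores, threshold, min_frames) / duration_minutes


if __name__ == "__main__":
    # Hypothetical scores: eyes open, one real blink, one single-frame glitch.
    demo = [0.3] * 10 + [0.1] * 3 + [0.3] * 10 + [0.1] + [0.3] * 6
    print(count_blinks(demo), blinks_per_minute(demo, fps=30.0))
```

A blink rate that looks unusually low or high for a person who is talking is only a hint that the video deserves a closer look, not proof of manipulation.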

Good practices against deepfakes

As a precaution, here are some other tips to avoid falling for deepfakes and, more generally, fake news:

✅ Check the statement. Ask yourself whether what was said is being reported accurately and in what context it was said.

✅ Ask for evidence. Once you have established exactly what was said, contact the person or institution where possible and ask for the evidence supporting their claim. This gives them the opportunity to back up their statement.

✅ Consult other sources. Check other publicly available, more reliable sources on the subject. These may include surveys, research, archives, experts, scientific journals, etc.

✅ Look at the details: the title, the dates, the way the information is structured. For example, if an article does not mention specific dates or places, treat the information with extra caution.

Conclusion

As you can see, anyone can create information on the web and on social networks. It is therefore essential to check its reliability. If in doubt, avoid liking or sharing, so as not to give more visibility to deepfakes and fake news.
