AI poses a number of ethical challenges. These include the manipulation of human behavior by algorithms and violations of privacy through the storage and use of personal data. The unreliability of generative AI output and algorithmic discrimination are other notable threats.

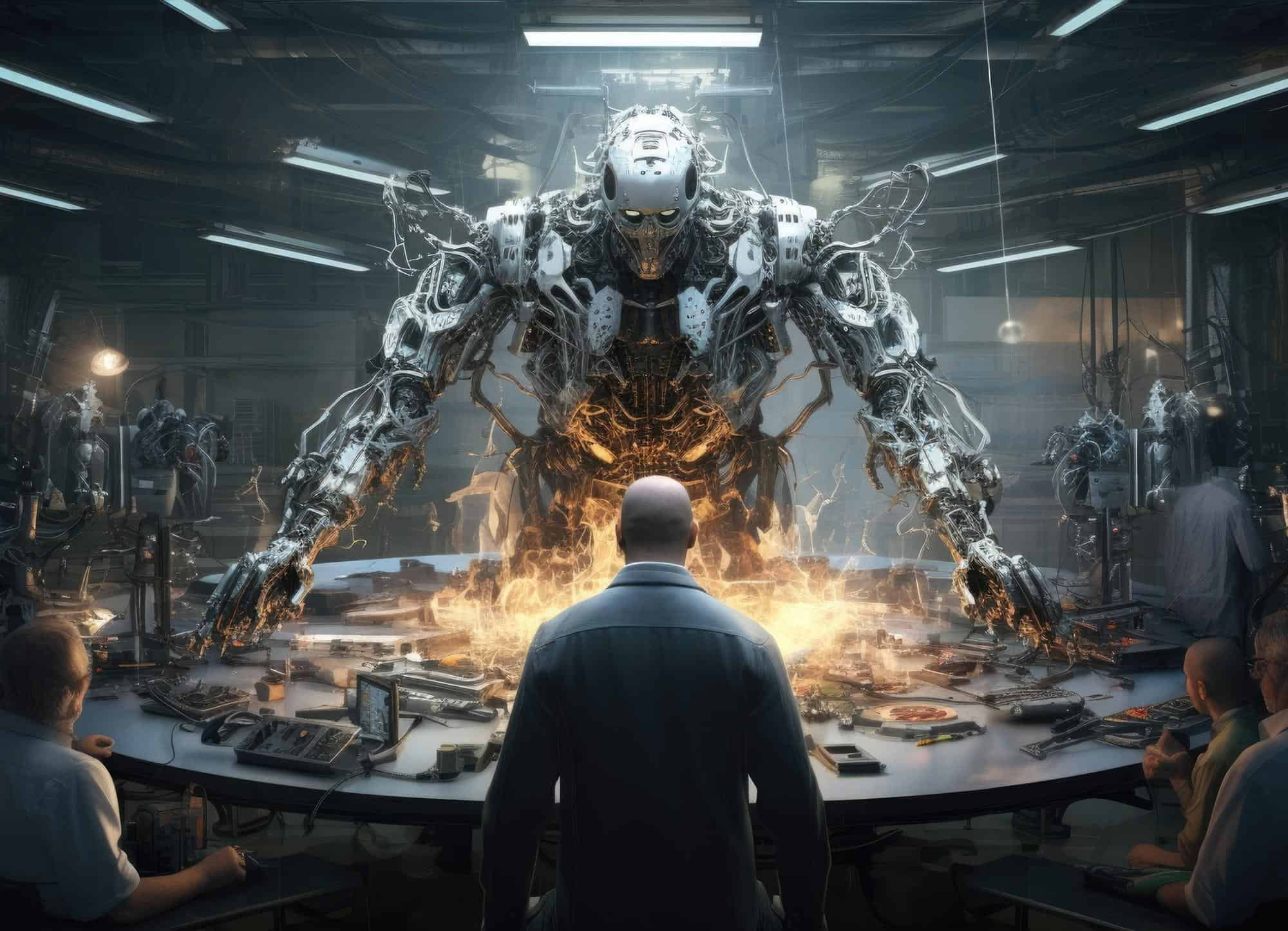

Lewis Griffin is a computer science researcher at University College London (UCL). In his view, "the expansion of the capabilities of AI-based technologies is accompanied by an increase in their potential for criminal exploitation." He catalogues each of the identified AI threats and ranks them by potential harm and ease of implementation. The aim is to assess the risk of criminal use.

AI flirts with manipulation

Disinformation is one of the most serious threats posed by AI because of its potential to influence opinion on a political and societal scale. Fake news spreading on social networks and the use of deepfakes both feed this disinformation. Because of the sheer volume of work it can automate, AI is the most effective way to generate and circulate large amounts of false information, crafted to appear credible and to spread quickly.

Today, many AI tools can perform this kind of task autonomously. Some are quite capable of writing fake news articles. One example is GPT-2, an earlier OpenAI model that is essentially ChatGPT's "little brother". It could reportedly generate "deepfakes for text" and share them on social networks by itself. Clearly, this mass generation of "plausible" content can have an enormous impact. When a piece of content floods the Internet on a large scale (a tactic known as flooding), it is often perceived as truthful by a public convinced that it is immune to any influence.

AI has the power to manipulate both information and the way it is perceived. Equipping yourself with good tools and learning to recognize AI-generated content is key to intellectual independence.